Our next topic is "Logic Programming and Prolog". This will be our last "new language" topic, and it is arguably the most different language that you will encounter in PLC. Prolog enables some unique programming idioms and admits some very short and clever programs, but be prepared to struggle with some of the intricacies of the language.

Preliminaries

Although we often think of "programming" as one thing, a careful look at the variety of programming languages and paradigms (and their historical origins) reveals that there are multiple notions of computation.

The Turing machine (circa 1936, by Alan Turing) embodies one notion of computation. The execution of a Turing machine, by reading and writing cells of a tape, captures the essence of imperative computation. The Impcore language from the beginning of the semester is one realization of this notion of computation as a programming language.

The λ-calculus (circa 1932, by Alonzo Church) embodies another notion of computation. The execution of a λ-calculus expression, by substitution of actual argument values for formal parameter variables, captures the essence of functional computation. The Scheme language is one realization of this notion of computation as a programming language.

Recall the Church-Turing thesis, which, in an informal usage, asserts that every notion of computation is equally powerful. The actual Church-Turing thesis is a little more subtle; for our purposes, we are simply observing that although Turing machines and the λ-calculus seem very different in how they perform computation, they are nonetheless very similar in a very deep sense --- any computation capable of being carried out on a Turing machine can also be carried out in the λ-calculus and vice versa. And it suggests that even more exotic notions of computation are nonetheless equally powerful.

Logical deduction (circa 325 BCE, by Aristotle) gives rise to such an exotic notion of computation. The "execution" of logical deduction works by asserting axioms and inference rules (which are assumed to be true), posing goals (whose truth or falsehood is to be established), and attempting to prove or disprove the goals by applying the axioms and inference rules. Logic programming and the Prolog language (circa 1970, by Alain Colmerauer) are realizations of this notion of computation as a programming paradigm and a programming language, respectively.

Logic Programming

Before looking more closely at the mechanics of logic programming, it is instructive to consider the philosophy of logic programming. Imperative (Impcore) and functional (Scheme) programming, despite their differences, emphasize a how-approach to programming --- the programmer describes how a computation should be executed. For example, consider reversing a list: the programmer writes a function that manipulates the input list and builds an output list. At the end of the day, the function indeed outputs a list that is the reverse of the input list, but the programmer should verify that the output list actually does have all of the properties expected of it. Arguably, this is indirect: the programmer knows what she wants (the reverse of the input list), but she has to describe how to produce what she wants. Logic programming (Prolog) emphasizes a what-approach to programming --- the programmer describes what constitutes an answer to the problem she would like to solve and the execution of the program attempts to find such an answer. Indeed, logic programming languages have been described as fifth-generation programming languages, raising the level of programming above previous generations of languages.

Writing and executing a logic program

Broadly speaking, logic programming is comprised of two steps:

- Construct a collection of axioms and rules and pose a goal
  - Axioms: logical statements assumed to be true (i.e., assumed to be a fact)
  - Rules: logical statements that derive new facts (from existing facts)
  - Goal: logical statement to be proven true (or disproven (i.e., proven false))
- System attempts to prove goal from axioms and rules

Within this framework, there are many design choices. Let’s consider a few of them.

Proving goals from axioms and rules

"System attempts to prove goal from axioms and rules" sounds straightforward, but can the system always prove (or disprove) any goal? Are there any other alternative behaviors? Because we are dealing with a notion of computation, it turns out that there are other behaviors. One possibility is that the system never terminates (i.e., enters an infinite loop), computing without ever proving or disproving the goal. One must also be very careful about understanding what "proven" and "disproven" mean in the context of a logic program. A goal that is "proven" can really be interpreted as "proven to be true" --- the system really will have found a way to justify the goal from the provided axioms and rules. However, a goal that is "disproven" cannot always be interpreted as "proven to be false"; instead, it must be interpreted as "cannot be proven to be true from the provided axioms and rules". We will return to this point (known as the closed-world assumption).

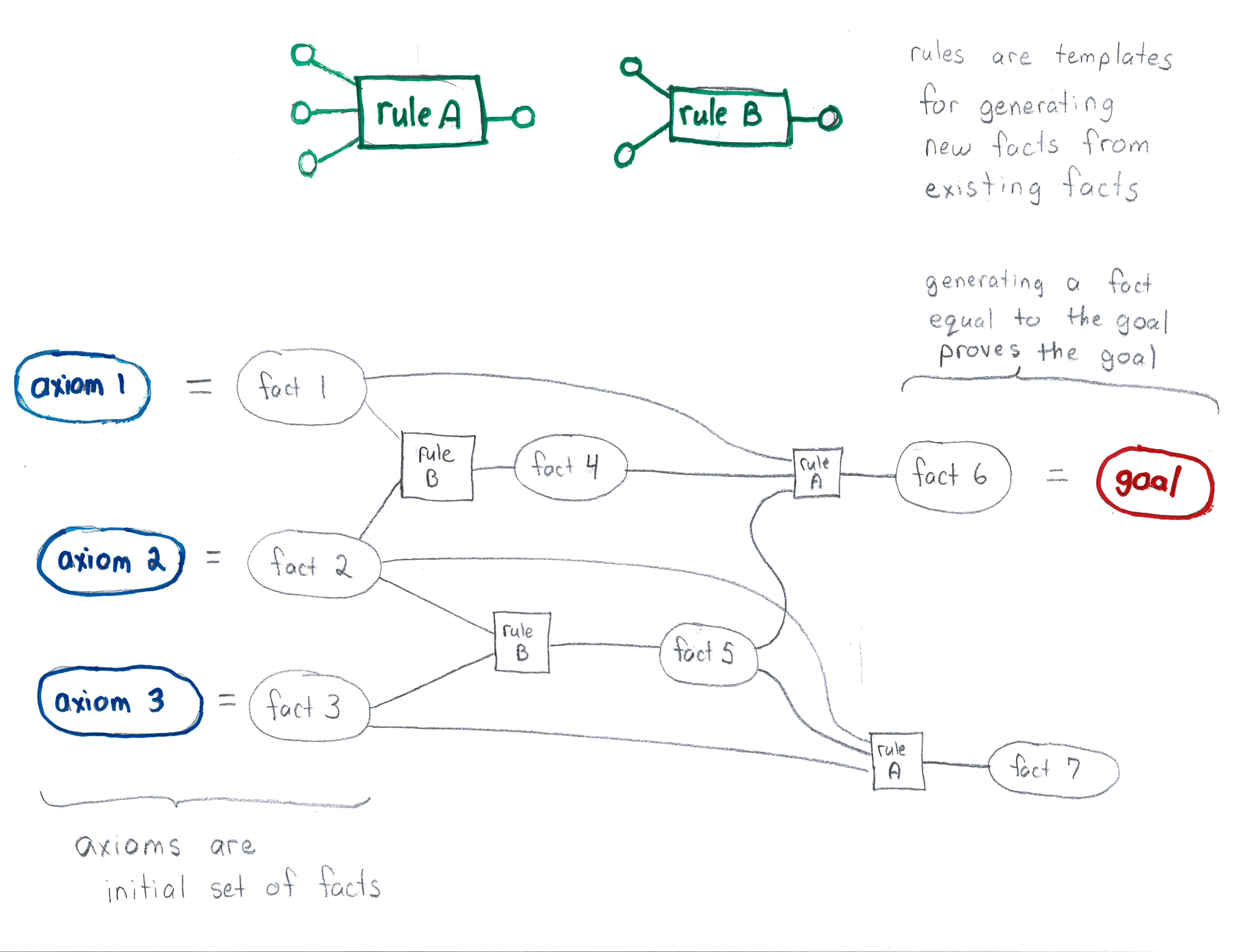

The next question to ask is what procedure the system will use in order to attempt to prove a goal from axioms and rules. The best way to think of this is that the axioms are an initial set of (proven) facts. Each rule is a way to use existing facts to generate a new fact. To prove a goal from axioms and rules, the system must show that the goal is a fact that can be generated by a procedure that starts with the initial set of facts (the axioms) and applies rules to generate new facts (using either the axioms or previously generated facts). Pictorially, a "proof" looks something like:

With this "picture" in mind, we can consider "proof search" to be an instance of a (particular kind of) graph search problem. The logic program (i.e., the collection of axioms and rules) implicitly defines a graph. The nodes of the graph are all possible logical statements (both ones that are true and ones that are false). The directed edges (technically, "directed multi-edges") of the graph go from one or more source nodes (logical statements) to a target node (logical statement) whenever there is a rule that derives the target node from the source nodes. In general, this could be an infinite graph (with an infinite number of both nodes and edges). Now, a goal is provable if there is a (finite) path from (some subset of) the nodes that correspond to axioms to the node that corresponds to the goal.

There are a variety of different ways to search this graph.

- Forward-chaining

  Start with the axioms and "work forwards" to find the goal.

  Now, apply either depth-first search or breadth-first search.

  Because this is a potentially infinite graph, DFS is problematic. The system might start with some particular axioms and rules and "wander off", spending an infinite amount of time exploring the "wrong" part of the graph and never finding the goal.

  On the other hand, BFS will eventually find the goal if it is provable. However, BFS requires significant memory to keep track of the search (recall that BFS must maintain the entire "frontier").

  Yet another option is iterative deepening, the variant of depth-first search that performs DFS up to depth 1, then up to depth 2, then up to depth 3, and so on. Like BFS, this will eventually find the goal if it is provable and will generally require less memory, but will require significant time.

  One problem with all of the above searches is that it is unclear whether any particular rule applied to some facts is getting "closer" to the goal. Indeed, for axioms and rules that induce a graph with infinite paths, if there is no path from the axioms to the goal, then all of the search procedures will loop forever, never able to establish that the goal cannot be proven to be true from the provided axioms and rules.

- Backward-chaining

  Start with the goal and "work backwards" to find the axioms.

  Intuitively, this is like reversing all of the edges of the graph and searching for a path from the goal to (some subset of) the axioms.

  Each of the above search procedures (DFS, BFS, iterative deepening) can be used with backward-chaining, with the same pros and cons: DFS might loop forever, BFS requires significant memory, and iterative deepening requires significant time.

  While it is also the case that backward-chaining has the problem that it is unclear whether any particular rule applied "backwards" to a goal is getting "closer" to the axioms, in practice backward-chaining is much less likely to explore "wrong" parts of the graph. Also, with backward-chaining, although the axioms and rules may induce a graph with infinite paths, there are often many unprovable goals for which the search will terminate, thereby establishing that the goal cannot be proven to be true from the provided axioms and rules.

For proof search, Prolog uses backward-chaining with depth-first search for efficiency.
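
The interaction between backward-chaining and depth-first search can be sketched with a small program. This is an illustrative example, not part of these notes' source files: the predicates edge, path, and path_left are hypothetical names, and the comments follow the ;; convention of the uProlog transcripts shown later in these notes (standard Prolog systems use % for comments).

```prolog
;; Hypothetical example: edge, path, and path_left are illustrative names.

;; Axioms: a small directed graph with edges a -> b and b -> c.
edge(a, b).
edge(b, c).

;; Rules: a path is a single edge, or an edge followed by a path.
path(X, Y) :- edge(X, Y).
path(X, Z) :- edge(X, Y), path(Y, Z).

;; Goal path(a, c), searched backwards and depth-first:
;;   rule 1 needs edge(a, c): no axiom matches, so backtrack
;;   rule 2 needs edge(a, Y) and path(Y, c): the first axiom gives Y = b
;;   path(b, c) by rule 1 needs edge(b, c): an axiom, so the answer is yes

;; The same relation written "left-recursively":
path_left(X, Z) :- path_left(X, Y), edge(Y, Z).
path_left(X, Y) :- edge(X, Y).

;; Goal path_left(a, c): the first rule reduces the goal to path_left(a, Y),
;; which the first rule again reduces to another path_left goal, and so on.
;; Depth-first search never reaches an axiom, so the search does not terminate.
```

Both rule sets describe the same logical relation; only the order in which depth-first search tries the clauses and subgoals differs. This is one reason why, in Prolog, the order of rules and of the conditions within a rule matters in practice.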

Writing axioms, rules, and goals

The next important question: What logic (language) should be used for writing axioms, rules, and goals?

Most logic programming languages are based on first-order predicate logic:

- Logic: statements composed of logical connectives and governed by formal proof rules

  Example: p ∧ q ⇔ ¬(¬ p ∨ ¬ q)

- Predicate: the atomic expressions (i.e., those that denote true or false) are relations among the objects of discourse

  Examples:

  - limit(f, infinity, 0)
  - enrolled(joe, csci_344)
  - teaches(fluet, csci_344)

  Note: We alone attach meaning to predicates. Within the logical system, predicates are simply structural building blocks with no meaning beyond that provided by the explicitly-stated relationships.

  Note: Predicates are relations, not functions. Relations don’t care about directions (input vs. output). Consider, for example, the teaches predicate above: for any given professor, there may be many courses that she teaches and for any given course, there may be many professors that teach it. This means that the flow of data is not one-way (p(x,y) vs f(x) = y).

  Using predicates/relations makes logic programming more flexible. By not having an "input" vs. "output" distinction, it is often possible to execute a logic program "backwards".

  Although not exemplified by Prolog, predicates/relations are well represented by databases (with high capacity). In such scenarios, logic programming is suitable for expert systems (where there is a huge quantity of expert knowledge encoded as predicates/relations stored in a database).

- First-order: ∀ and ∃ quantification over objects of discourse

  In ∀ x. F, x is a variable and F is a logical formula.

  For example, "All dogs go to heaven" would be written ∀ x. species(x, dog) ⇒ afterlife(x, heaven).

  The meaning of ∀ x. F quantification is the (possibly infinite) conjunction of all instantiations of F:

      (species(snoopy, dog) ⇒ afterlife(snoopy, heaven)) ∧
      (species(old_yeller, dog) ⇒ afterlife(old_yeller, heaven)) ∧
      (species(cujo, dog) ⇒ afterlife(cujo, heaven)) ∧
      (species(garfield, dog) ⇒ afterlife(garfield, heaven)) ∧
      (species(bambi, dog) ⇒ afterlife(bambi, heaven)) ∧ …

  In ∃ x. F, x is a variable and F is a logical formula.

  For example, "Some cat eats lasagne" would be written ∃ x. species(x, cat) ∧ eats(x, lasagne).

  The meaning of ∃ x. F quantification is the (possibly infinite) disjunction of all instantiations of F:

      (species(puss_in_boots, cat) ∧ eats(puss_in_boots, lasagne)) ∨
      (species(garfield, cat) ∧ eats(garfield, lasagne)) ∨
      (species(felix, cat) ∧ eats(felix, lasagne)) ∨
      (species(snoopy, cat) ∧ eats(snoopy, lasagne)) ∨
      (species(bambi, cat) ∧ eats(bambi, lasagne)) ∨ …

  Exercise: What is the intuitive meaning of ∀ c. (∃ s. enrolled(s, c) ⇒ ∃ p. teaches(p, c))?

  Note that there are a number of logical equivalences that relate ∀ and ∃:

  - ∀ x. ∀ y. F ≡ ∀ y. ∀ x. F
  - ∃ x. ∃ y. F ≡ ∃ y. ∃ x. F
  - ∀ x. F ≡ ¬(∃ x. ¬ F)
  - ∃ x. F ≡ ¬(∀ x. ¬ F)

  But, note the following inequivalence:

  - ∀ x. ∃ y. F ≢ ∃ y. ∀ x. F

  Exercise: Think of a formula F (the simplest could be p(x, y) for some predicate p) that demonstrates the inequivalence.

As noted just above, logical statements can be written in many (equivalent) ways. This is good for people: we can write a logical statement in the way that is most natural/understandable. But, this is bad for computers: they must handle all of the many ways that a logical statement could be written.

To address this, many computerized logic systems (not just logic programming languages) restrict the format of logical statements (so, humans/programmers may find that they cannot write a logical statement in the most natural/understandable way). But, by restricting the format of logical statements, some systems are able to prove theorems mechanically and logic programming languages are more efficient. In general, a restricted format means that some, but not all, logical statements are expressible in the format; although the restricted formats used by logic programming systems are unable to handle many theorems from mathematics, there is a surprising amount of power left.

- Horn clauses (in conjunctive normal form)

Horn clauses (in conjunctive normal form) are a restricted form of first-order predicate logic formulas:

\[\forall x_1, x_2, \ldots. \forall y_1, y_2, \ldots. Q_1 \wedge Q_2 \wedge \cdots \wedge Q_n \Rightarrow Q_0\]

Each \(Q_i\) is an atomic formula (a predicate applied to terms); that means that none of the \(Q_i\) can itself be a ∀, ∃, ∧, ∨, or ¬ formula. This is a proper restriction of first-order predicate logic: there are some first-order predicate logic statements that cannot be written as an equivalent statement that is a Horn clause.

Exercise: Think of a first-order predicate logic statement that cannot be written as an equivalent statement that is a Horn clause.

In the above, we separated the \(x_i\) variables from the \(y_i\) variables, because we typically think of the \(x_i\) variables as the variables that occur in \(Q_0\) (and possibly in \(Q_1\), …, \(Q_n\)) and the \(y_i\) variables as the variables that only occur in \(Q_1\), …, \(Q_n\) (and not in \(Q_0\)). With this understanding the above is logically equivalent to:

\[\forall x_1, x_2, \ldots. (\exists y_1, y_2, \ldots. Q_1 \wedge Q_2 \wedge \cdots \wedge Q_n) \Rightarrow Q_0\]

Sometimes thinking about a Horn clause with this form of quantification helps to understand the meaning of the formula.
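
The equivalence of the two quantified forms can be checked in a few steps. As a sketch, write \(Q\) for \(Q_1 \wedge Q_2 \wedge \cdots \wedge Q_n\), consider a single variable \(y\) (the general case repeats the argument for each \(y_i\)), and assume that \(y\) does not occur in \(Q_0\):

\[
\begin{array}{rcll}
\forall y.\ (Q \Rightarrow Q_0)
  & \equiv & \forall y.\ (\neg Q \vee Q_0)    & \text{definition of } \Rightarrow \\
  & \equiv & (\forall y.\ \neg Q) \vee Q_0    & y \text{ does not occur in } Q_0 \\
  & \equiv & \neg(\exists y.\ Q) \vee Q_0     & \forall y.\ \neg Q \equiv \neg(\exists y.\ Q) \\
  & \equiv & (\exists y.\ Q) \Rightarrow Q_0  & \text{definition of } \Rightarrow
\end{array}
\]

The third step is the equivalence ∃ x. F ≡ ¬(∀ x. ¬ F) from the list above, with both sides negated.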

Because the variables that occur in the \(Q_i\) atomic formulas must be quantified in these (equivalent) ways, a Horn clause is typically written without any explicit quantification:

\[Q_1 \wedge Q_2 \wedge \cdots \wedge Q_n \Rightarrow Q_0\]

But, remember that a Horn clause always has an implicit quantification of all of the variables that occur in the \(Q_i\) atomic formulas.

For representation of axioms, rules, and goals, Prolog uses Horn clauses (in conjunctive normal form).
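
As a sketch of how Horn clauses appear as Prolog clauses: the implication is written right-to-left with :- and the conjunction with commas. The example reuses the dog formula from above. The facts are chosen only for illustration, has_student is a hypothetical predicate, and the comments follow the ;; convention of the uProlog transcripts in these notes (standard Prolog systems use % for comments).

```prolog
;; Q1 ∧ ... ∧ Qn ⇒ Q0   is written   Q0 :- Q1, ..., Qn.
;; An axiom is the case n = 0 and is written   Q0.

;; Axioms (illustrative facts, assumed for this sketch).
species(snoopy, dog).
species(garfield, cat).
enrolled(joe, csci_344).

;; ∀ X. species(X, dog) ⇒ afterlife(X, heaven)
afterlife(X, heaven) :- species(X, dog).

;; has_student is a hypothetical predicate. S occurs only in the body, so
;; the rule reads: ∀ C. (∃ S. enrolled(S, C)) ⇒ has_student(C)
has_student(C) :- enrolled(S, C).

;; Goals and the answers expected from these clauses:
;;   ?- afterlife(snoopy, heaven).     yes
;;   ?- afterlife(garfield, heaven).   no
;;   ?- has_student(csci_344).         yes
```

Note that no quantifiers are written: every variable in a clause is implicitly quantified, as described above.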

Prolog

Prolog (named for "PROgrammation en LOGique") was developed by Alain Colmerauer in the early 1970s:

- ~1970, Colmerauer & Kowalski: logic programming
- 1972, Colmerauer & Roussel: first Prolog interpreter

Since then, Prolog has evolved to an industrial-strength programming language.

- 1995: ISO Prolog standard

Although it is not the only logic programming language, it is the dominant and most widely used logic programming language. Parts of Watson, the IBM machine that played Jeopardy, were written in Prolog.

Like the relationship between Turing machines/imperative programming and Impcore and between the λ-calculus/functional programming and Scheme, the relationship between logic programming and Prolog is complicated. Essentially, in the interest of efficiency and practical programming, Prolog is not pure logic programming; rather, it is logic programming with some impurities.

Although the above introduces Prolog in the context of logic programming, Prolog also has roots in projects on man-machine communication.

Prolog Programs

Neighbors Example

Recall that uProlog works in two modes:

- In rule mode, the prompt is -> and the interpreter (silently) accepts axioms or rules. These axioms and rules are maintained in the database. (For longer programs, though, we write axioms and rules in a file and load them into the interpreter.)
- In query mode, the prompt is ?- and the interpreter solves goals based on the previously entered axioms and rules.

The following transcript shows an interaction with the uProlog interpreter.

    ?- [rule].   ;; enter clauses (axioms and rules)
    -> ;; Axioms establishing who lives where. (1)
    -> address(adam, two, high_hill).
    -> address(beth, three, high_hill).
    -> address(cameron, four, high_hill).
    -> address(david, five, high_hill).
    -> address(edgar, six, high_hill).
    -> address(frank, seven, high_hill).
    -> address(greg, two, turk_hill).
    -> address(harry, three, turk_hill).
    -> address(ivan, four, turk_hill).
    -> address(john, five, turk_hill).
    -> address(kevin, six, turk_hill).
    -> address(lee, seven, turk_hill).
    -> ;; Many axioms for establishing that two "numbers" are "neighbors". (2)
    -> number_neighbors(one, two).
    -> number_neighbors(two, three).
    -> number_neighbors(three, four).
    -> number_neighbors(four, five).
    -> number_neighbors(five, six).
    -> number_neighbors(six, seven).
    -> number_neighbors(seven, six).
    -> number_neighbors(six, five).
    -> number_neighbors(five, four).
    -> number_neighbors(four, three).
    -> number_neighbors(three, two).
    -> number_neighbors(two, one).
    -> ;; A rule for establishing when two people are neighbors. (3)
    -> neighbors(P1, P2) :- address(P1, N1, S), address(P2, N2, S), number_neighbors(N1, N2).
    -> [query].   ;; enter queries
    ?- address(adam, two, high_hill).   ;; Does Adam live at 2 High Hill? (4)
    yes.
    ?- address(beth, four, turk_hill).  ;; Does Beth live at 4 Turk Hill? (4)
    no.
    ?- address(lee, N, S).              ;; Where does Lee live? (5)
    N = seven
    S = turk_hill;
    no
    ?- address(P, seven, high_hill).    ;; Who lives at 7 High Hill? (5)
    P = frank ;
    no.
    ?- neighbors(cameron, david).       ;; Are Cameron and David neighbors? (4)
    yes.
    ?- neighbors(cameron, edgar).       ;; Are Cameron and Edgar neighbors? (4)
    no.
    ?- neighbors(cameron, P).           ;; Who are Cameron's neighbors? (5)
    P = beth;
    P = david;
    no
    ?- neighbors(P, harry).             ;; Who are Harry's neighbors? (5)
    P = greg;
    P = ivan;
    no

See address.P

| Callout | Explanation |
|---|---|
| 1 | Our first set of axioms records where each person lives. Each of these axioms is the predicate address applied to three atoms (names starting with a lower-case letter), corresponding to a person, a number, and a street. Note that Prolog does not require any declaration of predicates or atoms before use; simply using a predicate or atom in an axiom or rule "teaches" the Prolog system about it. (On the other hand, a typo in an axiom or rule (e.g., writing addres(beth, two, high_hill)) will silently be accepted by Prolog.) |
| 2 | Our second set of axioms records which "numbers" are "neighbors". Note that the axioms explicitly encode the symmetry of the number_neighbors predicate: both number_neighbors(one,two) and number_neighbors(two,one) are included in the axioms. |
| 3 | Our first rule establishes when two people are neighbors. Logically, this rule corresponds to: \[\forall P_1, P_2. \left(\begin{array}{@{}l@{}} \exists N_1, N_2, S. \begin{array}[t]{@{}l@{}}\mathsf{address}(P_1, N_1, S) \\ {} \wedge \mathsf{address}(P_2, N_2, S) \\ {} \wedge \mathsf{number\_neighbors}(N_1, N_2) \end{array}\end{array}\right) \Rightarrow \mathsf{neighbors}(P_1, P_2)\] Alternatively, interpret the rule as saying: for any persons P1 and P2, if P1 lives at number N1 on street S, P2 lives at number N2 on the same street S, and N1 and N2 are neighboring numbers, then P1 and P2 are neighbors. In a rule, each variable must be written as a name starting with an upper-case letter. |
| 4 | Some of our goals are predicates without variables. Such a statement simply asks Prolog to prove or disprove the statement and uProlog responds with a simple yes (the goal is provable) or no (the goal is not provable). |
| 5 | More interestingly, some of our goals are predicates with variables. Such a statement asks Prolog to find all assignments of the variables that make the statement provable and Prolog responds with each satisfying assignment of the variables. In the interpreter, the first satisfying assignment is printed and then the interpreter pauses for user input. If the user enters ;, then the interpreter searches for the next satisfying assignment, prints it, and pauses again for user input. When the interpreter cannot find another satisfying assignment, it prints no (think "no more"). At any point, if the user does not enter ;, then the interpreter stops searching for satisfying assignments and prints yes. |
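
Callout 2 observes that the axioms spell out both directions of number_neighbors. One possible alternative, sketched here, states each adjacency once and recovers the symmetry with two rules. The helper predicate next_number is hypothetical and not part of address.P; the comments follow the ;; convention of the transcript above.

```prolog
;; next_number is a hypothetical helper predicate, not part of address.P.
next_number(one, two).
next_number(two, three).
next_number(three, four).
next_number(four, five).
next_number(five, six).
next_number(six, seven).

;; Two numbers are neighbors if either one directly follows the other.
;; These two rules stand in for the twelve number_neighbors axioms.
number_neighbors(N1, N2) :- next_number(N1, N2).
number_neighbors(N1, N2) :- next_number(N2, N1).
```

With the address axioms and the neighbors rule unchanged, the goal neighbors(cameron, P) should still yield beth and then david. Expressing the symmetry instead as the single rule number_neighbors(N1, N2) :- number_neighbors(N2, N1) alongside six one-directional number_neighbors axioms is logically sound but risky under depth-first search: a goal that matches no axiom, such as number_neighbors(four, two), would keep swapping its arguments forever.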

Prolog and the Closed-World Assumption

    ?- [rule].                ;; enter clauses (axioms and rules)
    -> mortal(X) :- man(X).   ;; All men are mortal; a rule.
    -> man(socrates).         ;; Socrates is a man; an axiom.
    -> [query].               ;; enter queries
    ?- mortal(socrates).      ;; Is Socrates mortal? (1)
    yes
    ?- mortal(fluet).         ;; Is Fluet mortal? (2)
    no

See mortal.P

| Callout | Explanation |
|---|---|
| 1 | Socrates is a man and all men are mortal; therefore, Socrates is mortal. Prolog finds exactly this proof. |
| 2 | (Cue nervous laughter from the audience.) |

What "went wrong"? Nothing: Prolog is behaving exactly as it should, because of the closed-world assumption: the database (of submitted axioms and rules) includes everything that is (assumed to be) true. Therefore, Prolog’s yes and no do not correspond to "true" and "false"; rather, they correspond to "can be proven" and "cannot be proven" with respect to the given axioms and rules.

So, in the above, the answer no to the goal mortal(fluet) simply means that mortal(fluet) cannot be proven from the given axioms and rules, which say nothing about fluet.

Acknowledgments

Portions of these notes are based upon material by Hossein Hojjat.